Note that when viewing the document, you only see the words that have been kept for indexing by the LDA model (when running the dot script above). In this example, we only see words tagged as nouns.

LemLDA is a topic modeling software package that implements Latent Dirichlet Allocation (LDA) for Hebrew.

The package is based on Heinrich's java implementation of collapsed Gibbs sampling with an extra variable to model the generative nature of lemmas in Hebrew.

LemLDA is based on preprocessing with the Morphological Disambiguator (Tagger) by Adler et al.:

LemLDA is free software: you can redistribute it and/or modify

it under the terms of the GNU General Public License as published by

the Free Software Foundation, either version 3 of the License, or

(at your option) any later version.

This program is distributed in the hope that it will be useful,

but WITHOUT ANY WARRANTY; without even the implied warranty of

MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

GNU General Public License for more details.

See the GNU General Public License.

wget http://www.cs.bgu.ac.il/~elhadad/nlpproj/lemlda.zip unzip lemlda.zip

% cd tagger

% tag

Usage: tag [corpusIn] [corpusOut]

Example: tag ..\corpus ..\corpusTagged

-

This will iterate over text files found in [corpusIn]

and perform morphological tagging on each file.

The tagged files will be placed in [corpusOut] in the format

of one word per line followed by a number which is a compact encoding as

a bitmask of the tag. The tag includes the Part of speech of the word,

its segmentation (in case of prefixes like ha- and bklm, mshvklv) and

its morphological properties (number, gender, tense, person etc).

The tagged files are encoded in UTF8.

-

java must be in your path.

% tag ..\corpus ..\corpusTagged

java.lang.StringIndexOutOfBoundsException: String index out of range: -83If this happens, you should pre-process your text using the following tokenizer before you apply the tag command: hebtokenizer.

% dot

Usage: dot [corpusTagged] [corpusTaggedDotted] [model:-token/-word/-lemma]

Example: dot ..\corpusTagged ..\corpusTaggedDotted -lemma

-

This will iterate over text files found in [corpusTagged]

and add vocalization (nikud) to each tagged word in each file.

The dotted files will be placed in [corpusTaggedDotted] in the format

of one word per line followed by its vocalized version.

The dotted files are encoded in UTF8.

-

The files in corpusTagged must have been produced by tag.

-

The model can be either: -token, -word or -lemma

If your text can be reasonably well handled by the tagger use -lemma,

otherwise choose -token

-

java must be in your path.

% dot ..\corpusTagged ..\corpusTaggedDotted -token

% cd lemlda-0.1 [Linux] % make train TOPICS= <topic number> DOCS_DIR=<in directory> DOCS_PAT=<input files pattern> OUT=<out> [Windows] % train Usage: train [topics] [docsDir] [outPath] Example: train 5 ..\corpusTaggedDotted ..\lda\model - java and python must be in your path. - topics is the desired number of topics to be learned by the LDA algorithm. - docsDir must be a full path where the documents encoded in UTF8 must be located. train will iterate over files with extension txt. The files must have been tagged and dotted using tag and dot. - outPath must be a full path to a file (for example model). train will generate several files in this folder whose name starts with the prefix given. for example model.dat, model.doc etc. - % train 5 ..\corpusTaggedDotted ..\lda\modelwhere:

[Linux]

% python python/result_browser.py 8080 <model>

[Windows]

% browse

Usage: browse [modelPath] [port]

Example: browse ..\lda\model 8080

-

This lauches an HTTP server that allows you to browse the documents

in your corpus by topic and the topics.

-

- modelPath refers to a model created by "train".

It must point to a path with the prefix name of the model.

- port is the TCP port on which the web browser will listen.

-

python must be in your path.

-

% browse ..\lda\model 8080

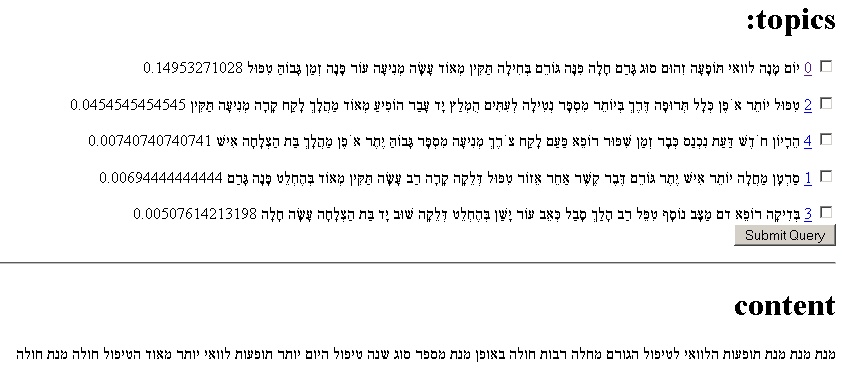

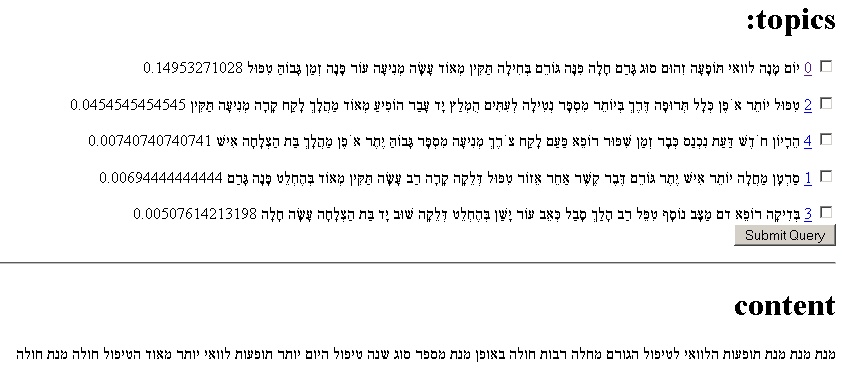

Consult http://localhost:8080/topics/

http://0.0.0.0:8080/

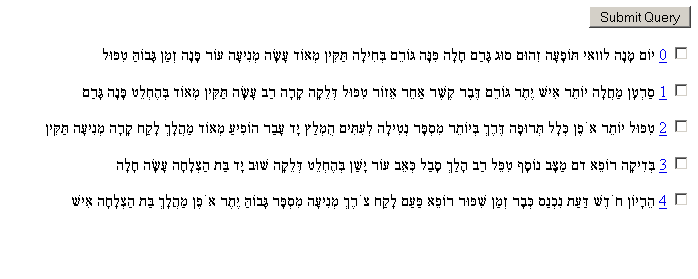

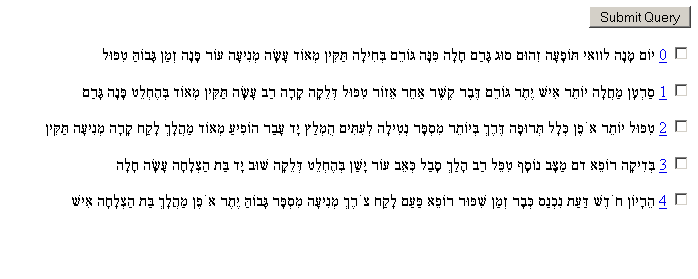

The topic exploration proceeds as follows:

Note that when viewing the document, you only see the words that have been kept for indexing by the LDA model (when running the dot script above). In this example, we only see words tagged as nouns.