-

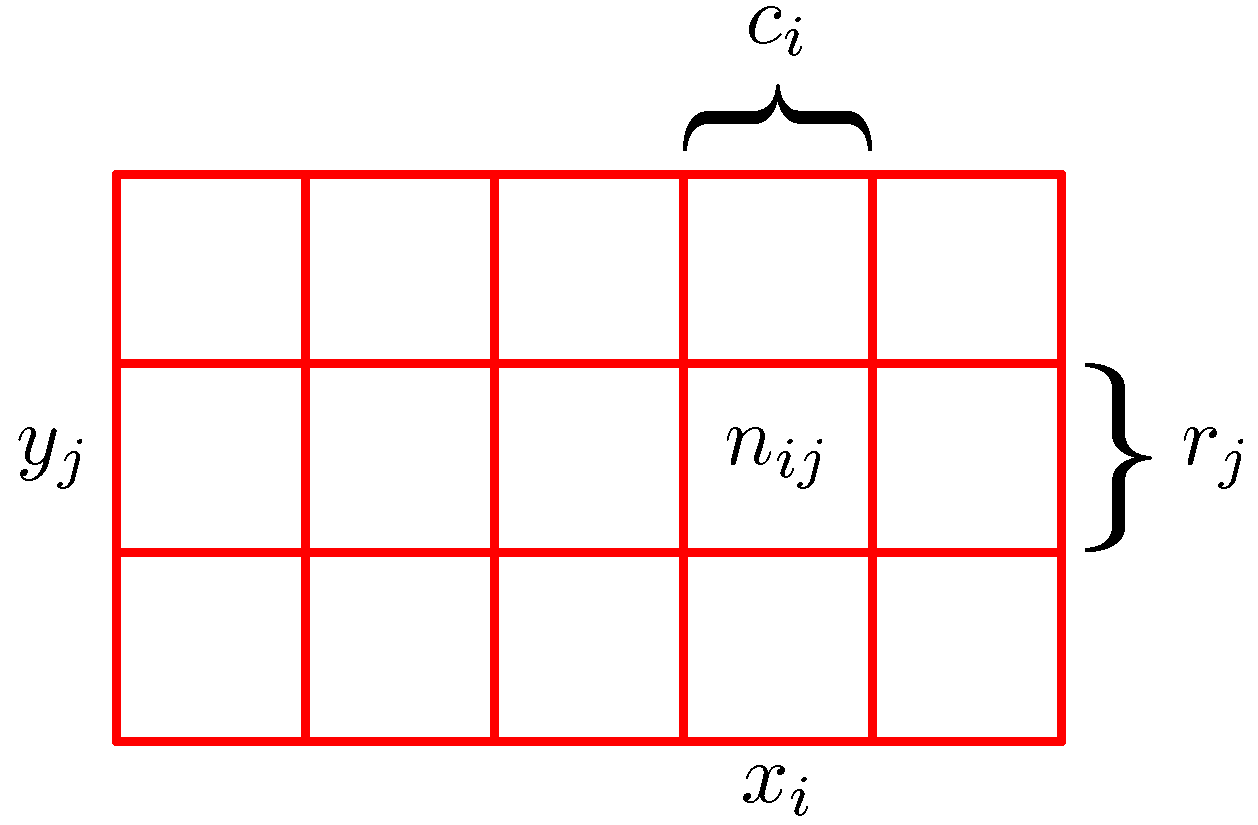

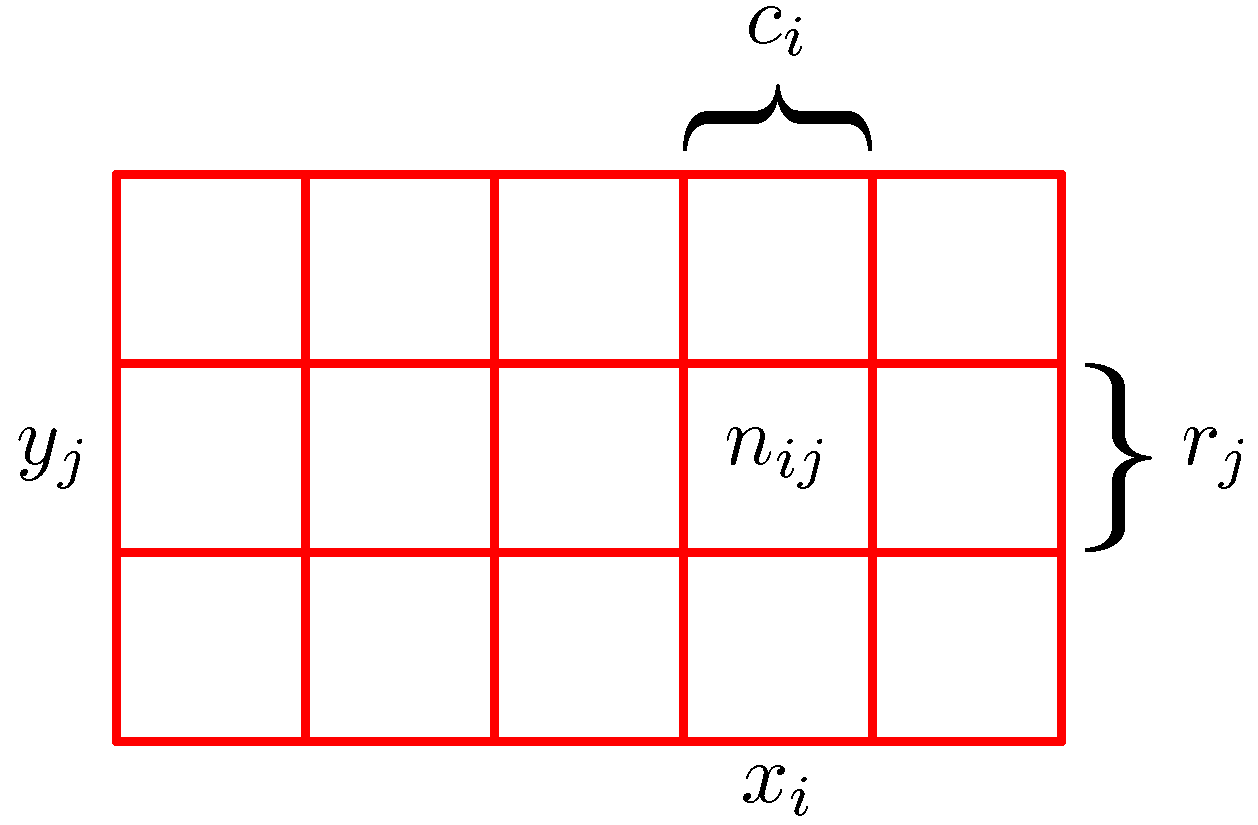

Consider a distribution over two discrete variables x, y displayed in the following figure:


Provide formulas to compute:

Joint probability:

Marginal probability for x:

Conditional probability of x given y:

-

What criterion, expressed in terms of conditional probability, defines two random variables X and Y as independent?

-

Consider a distribution object as a pure interface in the software engineering sense. List 5 methods that such an interface would expose, with their signatures:

-

Given a distribution p with parameters w and an observed dataset D, Bayes' formula states that:

p(w | D) = p(D | w) p(w) / p(D)
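
As a quick numerical check of the formula above, here is a minimal sketch for a parameter w that takes only two values. The values `w1`, `w2` and all the probabilities are made up for illustration. The normalizer p(D) is obtained by summing p(D | w) p(w) over w.

```python
# Hypothetical setup: w takes two values and D is one fixed observed dataset.
p_w = {"w1": 0.5, "w2": 0.5}            # p(w), assumed values
p_D_given_w = {"w1": 0.8, "w2": 0.2}    # p(D | w), assumed values

# p(D) = sum over w of p(D | w) p(w)
p_D = sum(p_D_given_w[w] * p_w[w] for w in p_w)

# p(w | D) = p(D | w) p(w) / p(D)
p_w_given_D = {w: p_D_given_w[w] * p_w[w] / p_D for w in p_w}

print(p_w_given_D)
```

Because p(D) is the sum of the numerators, the resulting values of p(w | D) sum to 1 over w.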

Indicate the definition of the following terms:

Posterior:

Prior:

Likelihood:

-

Indicate the objective that each of the following two estimation methods optimizes:

Maximum Likelihood Estimator (MLE): w* =

Maximum a posteriori estimator (MAP): w* =

-

Bayesian estimation differs from MLE and MAP in that it does not produce a point estimate of the parameters of a distribution given a dataset.

What does it do instead? How can Bayesian estimation be used to perform prediction?
